Commentary by Cory Doctorow: Plausible Sentence Generators

I was surprised as anyone when I found myself accidentally using a large language model (that is, an “AI” chatbot) to write some prose for me. I was twice as surprised when I found myself impressed by what it wrote.

Last month, an airline stranded me overnight in New York City when my flight to LA was canceled due to an air traffic control snafu. The airline rep at the counter told me to try flying standby the next morning, and promised me that – while he didn’t have any vouchers for me – if I booked a hotel, the airline would reimburse me when I got home.

It wasn’t cheap or easy. Lots of us were stranded in New York that night. Every hotel room in Queens and Manhattan was taken, and the flophouse I found in Brooklyn had raised its prices to over $300/night. Add two $70 taxis, and I was into serious money.

So when I (finally) got home and the airline told me that they had a policy of not reimbursing fliers who were stranded by air traffic problems, I was pissed. When the airline rep held that position even after I explained that I’d been promised reimbursement by the airline, I was furious. I decided to do something I’d never done before – I was gonna take the airline to small claims court.

I googled “how do you sue an airline in small claims court” and found myself at a website that laid it out neatly. First, try to resolve the issue with the airline (check). Next, send a “final demand” letter. Finally, sue (more details follow). Did I want any help with that final demand letter?

I did! I wrote a draft letter. Now, I’ve worked for a campaigning law firm for 20 years, and I’ve seen my share of threatening lawyer letters in my day. I’ve even received many of these – from the owner of a cyber-arms dealer, from Gwyneth Paltrow’s lawyer, from lawyers representing the owners of LAX’s private luxury terminal, from Ralph Lauren’s lawyer, from the Sackler opioid family’s lawyers, and more. When you report on corruption among the rich and powerful, legal threats are just part of the job.

My legal threat letter was pretty good, if I do say so myself. Factual, crisp, and very stern. I pasted it into the form and clicked submit, not sure what would happen next. The webpage warned me that I might be in for a wait of two or three minutes.

When I came back to the tab a couple minutes later, I found that the site had fed my letter to a large language model (probably ChatGPT) and that it had been transformed into an eye-watering, bowel-loosening, vicious lawyer letter.

Hell, it scared me.

Let’s get one thing straight. This was a very good lawyer-letter, but it wasn’t good writing. Legal threat letters are typically verbose, obfuscated and supercilious (legal briefs are even worse: stilted and stiff and full of tortured syntax).

This letter read like a $600/hour paralegal working for a $1,500/hour white-shoe lawyer had drafted it. That’s what made it a good letter: it sent a signal, “The person who sent this letter is willing to spend $600 just to threaten you. They are seriously pissed, and willing to spend a lot of money to make sure you know it.” Like a cat’s tail standing on end or a dog’s hackles rising, the letter’s real point isn’t found in its text. The real point is the threat display itself.

Letters often constitute a signal that transcends their content. Take letters of reference that professors write for grad students: these are a fair bit of work, and the mere fact that a prof is willing to write one speaks volumes about their respect for the student under discussion.

A university professor friend of mine recently confessed that everyone in their department now outsources their letter-of-reference writing to ChatGPT. They feed the chatbot a few bullet points and it spits out a letter, which they lightly edit and sign.

Naturally enough, this is slowly but surely leading to a rise in the number of reference letters they’re asked to write. When a student knows that writing the letter is the work of a few seconds, they’re more willing to ask, and a prof is more willing to say yes (or rather, they feel more awkward about saying no).

The next step is obvious: as letters of reference proliferate, people who receive these letters will ask a chatbot to summarize them in a few bullet points, creating a lossy process where a few bullet points are inflated into pages of puffery by a bot, then deflated back to bullet points by another one.

But whatever signal remains after that process has run, it will lack one element: the signal that this letter was costly to produce, and therefore worthy of taking into consideration merely on that basis.

The same fate is doubtless coming for scary lawyer letters. Once it becomes well-known that these letters can be generated gratis by an anonymous robolawyer site, the mere existence of such a letter will no longer signal that the sender is willing to go to the mattresses for justice. It’ll just mean that they know how to use a search engine.

We’ve lived through this already. When I started my career in activism, groups like the one I work for were just starting to figure out how to use online forms to help people communicate with their elected representatives and with regulators seeking public comment. In those early years, lawmakers and regulators treated these letters as strong signals, equivalent to the letters that people used to write by going to the public library and asking a reference librarian to help them figure out how to send a comment to an agency, or by rolling paper into their typewriters to fire a letter off to their elected rep (after first calling information to get the rep’s office number, then calling the office to get the address).

These letters were incredibly effective – for a little while. Then they weren’t – the recipients figured out that letters were a lot easier to send and discounted their importance. We countered by drumming up more interest, and then by building tools to help people call their reps and give them a piece of their minds. That, too, was effective, and then waned as lawmakers discounted that signal as well.

Today, activists continue to devise new ways to help people send an unmistakable signal to their governments: coordinating mass demonstrations and in-person meetings. SAG-AFTRA and Writers Guild members slog up and down the picket lines in my neighborhood every day, baking under a blazing heat-dome.

ChatGPT can take over a lot of tasks that, broadly speaking, boil down to “bullshitting.” It can write legal threats. If you need 2,000 words about “the first time I ate an egg” to go over your omelette recipe in order to make a search engine surface it, a chatbot’s got you. Looking to flood a review site with praise about your business, or complaints about your competitors? Easy. Letters of reference? No problem.

Bullshit begets bullshit, because no one wants to be bullshitted. In the bullshit wars, chatbots are weapons of mass destruction. None of this prose is good, none of it is really socially useful, but there’s demand for it. Ironically, the more bullshit there is, the more bullshit filters there are, which requires still more bullshit to overcome them.

I don’t know if my robolawyer letter will get the airline to cough up the $439.85.

After all, I just FedExed the letter today. The chatbot told me to do that – FedExes send a signal, too, of course.

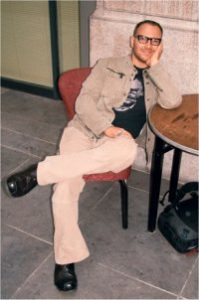

Cory Doctorow is the author of Walkaway, Little Brother, and Information Doesn’t Want to Be Free (among many others); he is the co-owner of Boing Boing, a special consultant to the Electronic Frontier Foundation, a visiting professor of Computer Science at the Open University and an MIT Media Lab Research Affiliate.

All opinions expressed by commentators are solely their own and do not reflect the opinions of Locus.

This article and more like it appear in the September 2023 issue of Locus.

While you are here, please take a moment to support Locus with a one-time or recurring donation. We rely on reader donations to keep the magazine and site going, and would like to keep the site paywall free, but WE NEED YOUR FINANCIAL SUPPORT to continue quality coverage of the science fiction and fantasy field.

©Locus Magazine. Copyrighted material may not be republished without permission of LSFF.